Failures

Image Quantity and Quality

While most parameters—such as model settings, data pipeline configurations, and optimization settings—work reasonably well out of the box, I found two factors especially critical for the quality of Gaussian splatting and sparse scene reconstruction.

The first is the number of input images. In my initial experiments, I kept only one frame out of every 15–20 frames, which discarded many valuable images from the video. The resulting low effective frame rate left too little overlap between consecutive images and caused reconstruction errors. When I reduced the sampling interval from every 20th frame to every 15th frame, the reconstruction improved significantly while the computational cost stayed manageable.
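The sampling interval (stride) directly determines how many frames reach COLMAP. Below is a minimal sketch, assuming OpenCV (`opencv-python`) is used to decode the video. The function names, the output file pattern, and the 30 fps clip in the usage lines are hypothetical and chosen only for illustration.

```python
import os


def select_frame_indices(total_frames: int, stride: int) -> list[int]:
    """Return the indices of the frames kept when sampling one frame every `stride` frames."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    return list(range(0, total_frames, stride))


def extract_frames(video_path: str, out_dir: str, stride: int) -> int:
    """Save every `stride`-th frame of a video as a PNG and return how many were written.

    OpenCV is imported lazily (assumption: opencv-python is installed), so
    select_frame_indices() can be used without it.
    """
    import cv2

    if stride < 1:
        raise ValueError("stride must be >= 1")
    os.makedirs(out_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"cannot open video: {video_path}")
    index = saved = 0
    while True:
        ok, frame = cap.read()
        if not ok:  # end of stream or read error
            break
        if index % stride == 0:
            cv2.imwrite(os.path.join(out_dir, f"frame_{saved:05d}.png"), frame)
            saved += 1
        index += 1
    cap.release()
    return saved


# Hypothetical 60-second clip at 30 fps (1,800 frames):
print(len(select_frame_indices(1800, 20)))  # 90 frames kept
print(len(select_frame_indices(1800, 15)))  # 120 frames kept
```

Going from a stride of 20 to 15 keeps a third more frames, which raises the overlap between neighboring images at a proportional cost in COLMAP and training time.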

The second factor is the number of optimization iterations. The default of 30,000 iterations produces good results, but longer runs can further improve quality; in one experiment, I extended training to 93,000 iterations.
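If you use the reference 3D Gaussian Splatting implementation (graphdeco-inria), whose `train.py` defaults to 30,000 iterations, the iteration count is set on the command line. The sketch below only builds the argument list. The flags assume that repository's `train.py`, and the dataset and output paths are hypothetical.

```python
import shlex


def gs_train_command(source_dir: str, model_dir: str, iterations: int = 30_000,
                     save_at: tuple[int, ...] = (7_000, 30_000)) -> list[str]:
    """Build a train.py command line for the reference Gaussian Splatting code (assumed flags).

    Save points scheduled after the last iteration are dropped, and the final
    iteration is always saved.
    """
    saves = sorted({s for s in save_at if s <= iterations} | {iterations})
    return [
        "python", "train.py",
        "-s", source_dir,  # COLMAP dataset directory (hypothetical path)
        "-m", model_dir,   # output directory for the trained model (hypothetical path)
        "--iterations", str(iterations),
        "--save_iterations", *map(str, saves),
    ]


cmd = gs_train_command("data/c_arm", "output/c_arm_93k", iterations=93_000)
print(shlex.join(cmd))
# python train.py -s data/c_arm -m output/c_arm_93k --iterations 93000 --save_iterations 7000 30000 93000
```

Passing `cmd` to `subprocess.run(cmd, check=True)` would start training. Keeping the 30,000-iteration snapshot in the save list makes it easy to compare the default result against the longer run.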

A third limiting factor is image resolution. The resulting mesh quality was relatively low, largely because of the combination of limited frames and low-resolution input images. Image resolution is constrained by available GPU memory, since higher-resolution images require GPUs with more memory.
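A rough calculation shows why resolution and GPU memory are tied together: image memory grows with pixel count, so halving both width and height cuts it by a factor of four. This sketch assumes every training image is kept on the GPU as a float32 RGB tensor, which as far as I know is the reference Gaussian Splatting code's default. The 200-frame count and the resolutions are illustrative, not measurements from my runs.

```python
def image_tensor_bytes(width: int, height: int, n_images: int,
                       channels: int = 3, bytes_per_value: int = 4) -> int:
    """Bytes needed to keep n_images float32 RGB training images resident on the GPU.

    Counts image storage only (assumption); the Gaussians, gradients, optimizer
    state and rendering buffers come on top of this.
    """
    return width * height * channels * bytes_per_value * n_images


GIB = 2**30
full = image_tensor_bytes(1920, 1080, n_images=200)
half = image_tensor_bytes(960, 540, n_images=200)
print(f"{full / GIB:.2f} GiB")  # 4.63 GiB
print(f"{half / GIB:.2f} GiB")  # 1.16 GiB
```

If memory is tight, the reference implementation's `-r`/`--resolution` option downsamples the training images (e.g., `-r 2` for half resolution), trading detail for a smaller footprint.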

Recap:

Successes

There are many steps in this pipeline where errors can occur, so I strongly recommend an iterative, trial-and-error approach.

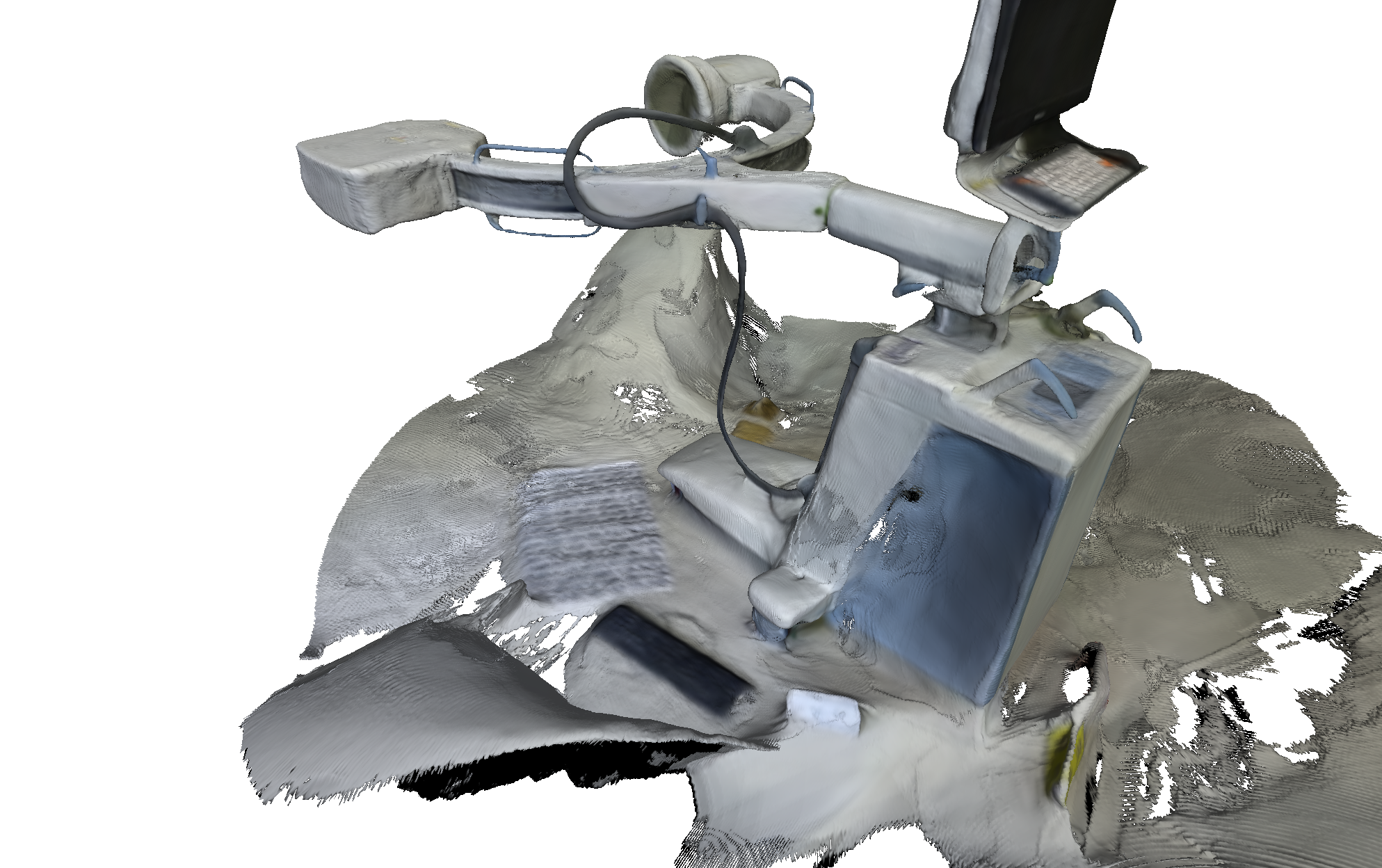

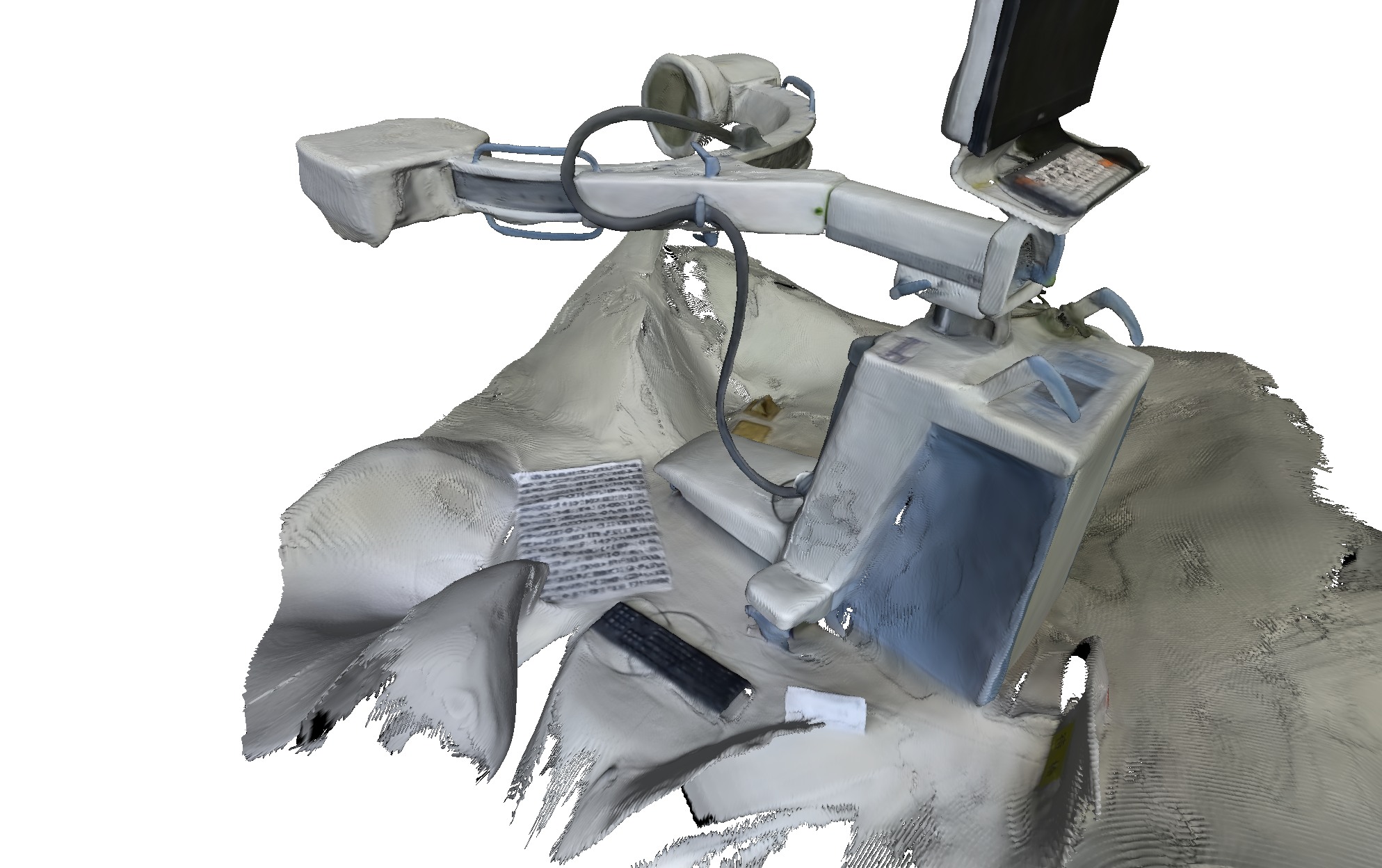

A word of caution: early-stage errors tend to propagate and can severely degrade the final results. For example, if COLMAP’s sparse reconstruction is inaccurate or fails, the subsequent Gaussian splatting will produce flawed outputs. This was evident in the medial-lateral C-arm Gaussian splatting result, where an extra phantom arm appeared, an artifact not present in the real object. The error was not introduced during the Gaussian splatting step; it originated in COLMAP’s sparse reconstruction, where misalignment led to incorrect camera poses. The Gaussian splats were then placed according to these faulty poses.
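Because a bad pose only becomes visible later as an artifact like the phantom arm, it can pay to inspect the COLMAP poses before training. One rough check, sketched here under my own assumptions, converts each pose in `images.txt` (stored world-to-camera as a quaternion and translation) to a camera center C = -R^T t and flags cameras that sit far from the rest. This check is not a COLMAP feature, and the 3x median-distance threshold is arbitrary. A binary model can be exported to text with `colmap model_converter --input_path sparse/0 --output_path sparse_txt --output_type TXT` (paths hypothetical). A misregistered camera is not always a distance outlier, so treat this as a heuristic.

```python
import math
import statistics


def quat_to_rotmat(qw, qx, qy, qz):
    """Rotation matrix for a quaternion in COLMAP's QW, QX, QY, QZ order (normalized first)."""
    n = math.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
    qw, qx, qy, qz = qw / n, qx / n, qy / n, qz / n
    return [
        [1 - 2 * (qy * qy + qz * qz), 2 * (qx * qy - qz * qw), 2 * (qx * qz + qy * qw)],
        [2 * (qx * qy + qz * qw), 1 - 2 * (qx * qx + qz * qz), 2 * (qy * qz - qx * qw)],
        [2 * (qx * qz - qy * qw), 2 * (qy * qz + qx * qw), 1 - 2 * (qx * qx + qy * qy)],
    ]


def camera_centers(images_txt: str) -> dict:
    """Map image name -> camera center C = -R^T t, parsed from the text of images.txt."""
    lines = [ln for ln in images_txt.splitlines() if not ln.startswith("#")]
    centers = {}
    # Each image uses two lines: the pose line, then its 2D points (possibly empty).
    for pose_line in lines[0::2]:
        fields = pose_line.split()
        if len(fields) < 10:
            continue
        # IMAGE_ID QW QX QY QZ TX TY TZ CAMERA_ID NAME
        qw, qx, qy, qz, tx, ty, tz = map(float, fields[1:8])
        name = " ".join(fields[9:])
        R = quat_to_rotmat(qw, qx, qy, qz)
        t = (tx, ty, tz)
        centers[name] = tuple(-sum(R[j][i] * t[j] for j in range(3)) for i in range(3))
    return centers


def suspicious_cameras(centers: dict, factor: float = 3.0) -> list:
    """Heuristic (my assumption, not part of COLMAP): names of cameras whose distance
    from the median center exceeds `factor` times the median distance."""
    median_center = tuple(statistics.median(c[i] for c in centers.values()) for i in range(3))
    dists = {name: math.dist(c, median_center) for name, c in centers.items()}
    typical = statistics.median(dists.values())
    return sorted(name for name, d in dists.items() if d > factor * typical)
```

Running `suspicious_cameras(camera_centers(text))` on the exported `images.txt` lists candidate images to drop or re-capture before rerunning COLMAP.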

Videos and Images

- Capture images with good texture. Avoid completely texture-less images (e.g., a white wall or empty desk). If the scene does not contain enough texture itself, you could place additional background objects, such as posters.
- Capture images under similar illumination conditions. Avoid high dynamic range scenes (e.g., pictures against the sun with shadows or pictures through doors/windows). Avoid specularities on shiny surfaces.
- Capture images with high visual overlap. Make sure that each object is seen in at least 3 images; the more images, the better.
- Capture images from different viewpoints. Do not take images from the same location by only rotating the camera; for example, take a few steps after each shot. At the same time, try to have enough images from relatively similar viewpoints. Note that more images are not necessarily better and might slow down the reconstruction process. If you use a video as input, consider down-sampling the frame rate.
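Whether the "seen in at least 3 images" guideline held can be checked after reconstruction by looking at track lengths, that is, how many images observe each 3D point in COLMAP's `points3D.txt`. The helpers below are a hypothetical sketch that parses the text format. The 3-view threshold simply mirrors the guideline above. As far as I know, `colmap model_analyzer` also reports a mean track length.

```python
def track_lengths(points3d_txt: str) -> list[int]:
    """Number of distinct images observing each 3D point in the text of points3D.txt."""
    lengths = []
    for line in points3d_txt.splitlines():
        if not line.strip() or line.startswith("#"):
            continue
        fields = line.split()
        # POINT3D_ID X Y Z R G B ERROR, followed by (IMAGE_ID, POINT2D_IDX) pairs.
        image_ids = set(fields[8::2])
        lengths.append(len(image_ids))
    return lengths


def overlap_report(lengths: list[int], min_views: int = 3) -> dict:
    """Summarize how many points are seen in at least `min_views` images."""
    if not lengths:
        return {"points": 0, "mean_track_length": 0.0, "fraction_min_views": 0.0}
    return {
        "points": len(lengths),
        "mean_track_length": sum(lengths) / len(lengths),
        "fraction_min_views": sum(n >= min_views for n in lengths) / len(lengths),
    }
```

A low fraction of points seen in three or more images suggests too little overlap between views, which in a video-based capture usually means the frame stride is too large.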

Future Work and Promising Algorithms

The field of computer vision is advancing rapidly, with new papers and techniques that address open technical challenges appearing almost daily. As a result, future algorithms are likely to provide faster, higher-quality, and more practical 3D reconstructions.

I am particularly excited about exploring Gaussian splatting for dynamic scenes, that is, handling temporal changes to capture 4D information rather than only static 3D scenes such as the C-arm machine example above.