Introduction

Today I would like to share some basic video-processing operations:

- Reading a video

- Detecting and quantifying changes in motion with an optical flow algorithm

- Measuring action duration

- Writing a video

The motivation comes from a recent job interview. During the Q&A session, I was asked to use a simple algorithm to count the number of repetitions a subject performs in a short video. What I am about to share may therefore be of some use to you.

Methods

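# -*- coding: utf-8 -*-
import cv2
import numpy as np
from skimage.measure import label, regionprops


def calculate_area(threshold, arr, status):
    """Return the pixel area of the largest connected region in which
    every channel of arr meets or exceeds the per-channel threshold."""
    # True where each channel is >= its threshold, then require all channels
    mask = np.greater_equal(arr, threshold)
    mask = np.all(mask, axis=-1)
    # Label connected regions and keep the largest area
    label_image = label(mask)
    biggest = 0
    for region in regionprops(label_image):
        if region.area >= biggest:
            biggest = region.area
    # print('biggest area for', status, biggest)
    return biggest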

################# config ###################
font = cv2.FONT_HERSHEY_SIMPLEX
bottomLeftCornerOfText = (150, 150)
fontScale = 1
fontColor = (255, 255, 255)
lineType = 2

# Color intensity thresholds in BGR order (OpenCV's default)
up_threshold = np.array([[[100, 0, 0]]])
down_threshold = np.array([[[0, 50, 0]]])
area_threshold = 5000
rep = []

# Capture the video stream (the audio stream is discarded)
cap = cv2.VideoCapture("test.mp4")
fps = cap.get(cv2.CAP_PROP_FPS)
print("Frames per second : {0}".format(fps))

# The MJPG codec produces AVI videos
fourcc = cv2.VideoWriter_fourcc('M', 'J', 'P', 'G')
# Double the width to accommodate the raw video and the optical flow side by side
frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
out = cv2.VideoWriter('output_count.avi', fourcc, fps, (2 * frame_width, frame_height))

ret, frame1 = cap.read()
prvs = cv2.cvtColor(frame1, cv2.COLOR_BGR2GRAY)
# HSV canvas for visualizing the flow; saturation is fixed at its maximum
hsv = np.zeros_like(frame1)
hsv[..., 1] = 255
i = 0
timestamps = []

while cap.isOpened():
    i += 1
    ret, frame2 = cap.read()
    if not ret:
        break
    next_gray = cv2.cvtColor(frame2, cv2.COLOR_BGR2GRAY)
    if i == 1:
        flow = cv2.calcOpticalFlowFarneback(prvs, next_gray, None, 0.5, 3, 15, 3, 5, 1.2, 0)
    else:
        flow = cv2.calcOpticalFlowFarneback(prvs, next_gray, flow, 0.5, 3, 15, 3, 5, 1.2, 0)

    # Encode flow direction as hue and flow magnitude as brightness
    mag, ang = cv2.cartToPolar(flow[..., 0], flow[..., 1])
    hsv[..., 0] = ang * 180 / np.pi / 2
    hsv[..., 2] = cv2.normalize(mag, None, 0, 255, cv2.NORM_MINMAX)
    rgb = cv2.cvtColor(hsv, cv2.COLOR_HSV2BGR)  # note: channels are in BGR order

    # Largest connected area that passes each color threshold
    d1 = calculate_area(down_threshold, rgb, 'down')
    u1 = calculate_area(up_threshold, rgb, 'up')
    if d1 > area_threshold:
        if u1 > area_threshold:
            if d1 > u1:
                status = 'down'
            else:
                status = 'up'
        else:
            status = 'down'
    elif u1 > area_threshold:
        status = 'up'
    else:
        status = None

    # Record a timestamp only when the direction changes
    if status:
        if len(rep) == 0:
            rep.append(status)
            timestamps.append(i)
        if rep[-1] != status:
            rep.append(status)
            timestamps.append(i)
    print(i, status, timestamps, rep, rep.count('down') - 1)

    cv2.putText(frame2, "Repetitions: " + str(rep.count('down') - 1),
                bottomLeftCornerOfText, font, fontScale, fontColor, lineType)
    frame2 = np.concatenate((frame2, rgb), axis=1)
    out.write(frame2)
    prvs = next_gray
    # if i > 30:
    #     break

print("Video Generated")
out.release()
cap.release()
cv2.destroyAllWindows()

################# rate of motion ###############

rates = np.diff(timestamps)

down_up = np.array(rates[0:-1:2]) * 1/fps

up_down = np.array(rates[1::2] ) * 1/fps

import matplotlib.pyplot as plt

X = np.linspace(0,0.5,num=2)

fig = plt.figure()

ax = fig.add_axes([0,0,1,1])

ax.bar(X[0], np.mean(down_up), yerr=np.std(down_up), color = 'b', width = 0.25)

ax.bar(X[1], np.mean(up_down), yerr=np.std(up_down), color = 'g', width = 0.25)

# ax.legend()

ax.set_xticks(X)

ax.set_xticklabels(['down_to_up', 'up_to_down'])

ax.set_ylabel('time taken to perform a motion in seconds')

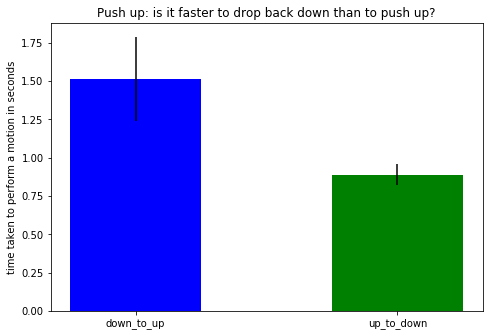

plt.title('Push up: is it faster to drop back down than to push up?')

plt.savefig('rate.png', bbox_inches = 'tight' , transparent=True)

Optical flow estimates the horizontal and vertical velocity components (u and v) at each pixel by assuming that adjacent frames are similar except for the object that has moved. After watching the video, I believe this assumption largely holds: the background is mostly static and its intensity stays constant throughout, with one exception that I discuss in the Results section. The script reads the video frame by frame and computes Farneback optical flow on each pair of consecutive frames.

A dense optical flow algorithm such as Farneback has an advantage here over sparse optical flow algorithms. A sparse algorithm tracks the movement of selected markers or feature points, whereas a dense algorithm estimates motion at every pixel, so there is no need to pick and track feature points on the torso. Such features are unnecessary anyway, because the torso occupies a large area in the center of each frame. It is worth noting that, although the script passes the previous flow into the next call as an initialization, the final flow appears to be the same with or without it.

Results

When someone does a push-up, we look for a change in the direction of the torso's motion. By mapping the flow vectors to colors (direction to hue, magnitude to brightness), we can intuitively "see" the motion vectors in action.

The left panel shows the raw video with the repetition count written in white. The right panel shows the optical flow between the two most recent frames, with direction encoded as hue and magnitude as brightness.
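The color encoding in the right panel can be sketched with NumPy alone. This is a stand-in for the `cv2.cartToPolar` and `cv2.normalize` calls in the script, not a replacement for them; `flow_to_hue_value` is a hypothetical name used only here, and the final HSV-to-BGR conversion is left out.

```python
import numpy as np


def flow_to_hue_value(flow):
    """Map an (H, W, 2) flow field to hue (0-180) and brightness (0-255)."""
    u, v = flow[..., 0], flow[..., 1]
    mag = np.hypot(u, v)
    # Angle in [0, 2*pi), matching the range that cv2.cartToPolar returns
    ang = np.arctan2(v, u) % (2 * np.pi)
    # Halve the angle in degrees so it fits OpenCV's 8-bit hue range
    hue = ang * 180 / np.pi / 2
    # Min-max scaling of the magnitude to 0-255
    span = mag.max() - mag.min()
    value = np.zeros_like(mag) if span == 0 else (mag - mag.min()) / span * 255
    return hue, value


# Four pixels moving right, down, left (twice as fast), and up.
# In image coordinates, positive v points down.
flow = np.array([[[1.0, 0.0], [0.0, 1.0]],
                 [[-2.0, 0.0], [0.0, -1.0]]])
hue, value = flow_to_hue_value(flow)
print(hue)
print(value)
```

Opposite directions land 90 hue units apart, which is why upward and downward motion show up as clearly different colors.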

At the moment the torso changes direction (either at the top or at the bottom, near the ground), the optical flow captures the change in the motion vectors, which is easy to see in the colored flow panel. The motion occurs predominantly in one direction (shown in a particular color) and across a large group of pixels outlining the subject's torso. Thus, simple thresholding is sufficient to trigger and record the signal. Returning to the exception mentioned in the Methods section: on some frames, non-zero optical flow values appear across the entire background. I suspect this is due to camera motion, as the camera appears to shift partway through the push-ups.
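The thresholding and counting logic can be isolated from the video code as a small sketch. The function names `classify_frame` and `count_repetitions` and the sample areas are made up for illustration. One deliberate difference from the script: the count is clamped at zero, so it never shows -1 before the first 'down' is detected.

```python
def classify_frame(down_area, up_area, area_threshold=5000):
    """Return 'down', 'up', or None from the largest region areas of one frame."""
    down_ok = down_area > area_threshold
    up_ok = up_area > area_threshold
    if down_ok and up_ok:
        # Both directions pass the threshold: the larger region wins
        return 'down' if down_area > up_area else 'up'
    if down_ok:
        return 'down'
    if up_ok:
        return 'up'
    return None


def count_repetitions(statuses):
    """Collapse per-frame statuses into direction changes and count repetitions."""
    transitions = []
    for status in statuses:
        # Skip frames with no signal and frames that repeat the last direction
        if status is not None and (not transitions or transitions[-1] != status):
            transitions.append(status)
    # The first 'down' only marks the start, so it is not counted as a repetition
    return max(transitions.count('down') - 1, 0), transitions


# Made-up (down_area, up_area) pairs for ten consecutive frames
areas = [(6000, 0), (7000, 100), (0, 0), (100, 8000), (9000, 9500),
         (6000, 200), (0, 0), (5000, 5000), (300, 7000), (8000, 7000)]
statuses = [classify_frame(d, u) for d, u in areas]
reps, transitions = count_repetitions(statuses)
print(reps, transitions)  # 2 ['down', 'up', 'down', 'up', 'down']
```

Because only changes of direction are recorded, a few noisy frames with no signal in between do not inflate the count.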

Pushing up typically takes more effort than dropping down. We can check whether this shows in the video by measuring the time elapsed between direction changes. The frame at which the direction changes gives the time of each event, and the difference between consecutive events gives the duration of each movement. The time from the down position to the up position is plotted in blue, and the time from the up position to the down position in green. In this video, pushing up takes longer than dropping down.
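As a minimal sketch of the duration arithmetic, the snippet below converts the frame indices of direction changes into seconds and splits them into the two alternating movements. The name `transition_durations` and the sample numbers are hypothetical. It assumes the first recorded status is 'down', and unlike the script it keeps every gap, including the last one.

```python
import numpy as np


def transition_durations(timestamps, fps):
    """Split the gaps between direction changes into two alternating groups, in seconds."""
    seconds = np.diff(timestamps) / fps
    # Even-indexed gaps start at the first status, odd-indexed gaps at the second
    return seconds[0::2], seconds[1::2]


# Made-up frame indices of direction changes in a 30 fps video,
# assuming the first recorded status is 'down'
down_to_up, up_to_down = transition_durations([10, 40, 55, 85, 100], fps=30.0)
print(down_to_up.mean(), up_to_down.mean())  # 1.0 0.5
```

If the first recorded status were 'up' instead, the two groups would swap roles, so it is worth checking the first entry of `rep` before labelling the bars.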

Conclusions

I hope this short tutorial is helpful for your next interview.